Cloud Infrastructure Deployment: Benefits, Challenges, and Best Practices for Modern Businesses

When I first explored cloud infrastructure deployment, I realized just how much it transforms the way we build and scale digital solutions. Gone are the days of wrestling with physical servers or worrying about hardware limitations. Now I can spin up resources in minutes and focus on what matters—delivering value fast.

Cloud infrastructure lets me adapt to changing demands without breaking a sweat. Whether I’m launching a new app or expanding an existing platform, the flexibility and reliability of the cloud make everything run more smoothly. It’s not just about saving time—it’s about unlocking new possibilities for innovation and growth.

What Is Cloud Infrastructure Deployment?

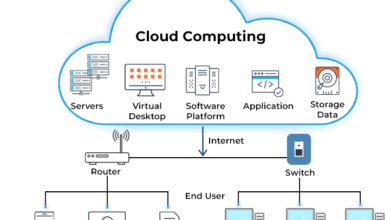

Cloud infrastructure deployment is the process of setting up and configuring computing resources, like servers, storage, networking, and software, using cloud computing platforms. Instead of purchasing and installing physical hardware in an on-site data center, I utilize cloud services from providers such as Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform. These services allow me to provision resources on demand. This gives me control over how much computing power or storage I need without the upfront costs or long-term commitments.

Deploying infrastructure in the cloud means I am leveraging virtualization technology. Virtualization lets multiple applications and operating systems run on a single physical machine by creating virtual versions of servers or storage devices. This not only saves physical space but also increases resource efficiency. Typically, I interact with these resources using a web-based dashboard, command-line tools, or APIs, enabling me to automate deployment and management tasks.

There are several deployment models. Public cloud services provide resources over the internet and are shared among multiple users. Private clouds offer dedicated resources for a single organization, often for enhanced security or compliance needs. Hybrid clouds combine both, letting me balance between control and flexibility.

Cloud infrastructure deployment usually involves a few key steps:

- Selecting a cloud provider and the types of services needed.

- Configuring virtual machines, networks, and storage.

- Setting up security groups and access controls.

- Automating deployment with tools like Terraform, CloudFormation, or Ansible.

This process enables me to scale resources up or down with a few clicks or automated rules, allowing me to respond quickly to changes in traffic or workload. I worry far less about individual hardware failures, since cloud providers typically build redundancy and high availability into their services. This makes it much easier and faster for me to deploy websites, applications, and back-end services at any scale.

Key Features of Cloud Infrastructure Deployment

Deploying infrastructure in the cloud unlocks a powerful range of features that make modern digital solutions more practical and future-proof. Here are the aspects I find most critical when evaluating cloud infrastructure for any organization.

Scalability and Flexibility

Cloud platforms enable me to instantly scale computing resources up or down based on current traffic or business needs. For example, during a product launch or seasonal usage spike, I can add more server instances in minutes and scale them down just as quickly when demand drops. This elasticity means I do not pay for unused resources, and I am always prepared for unexpected growth or shifts in activity without manual intervention.
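To make the elasticity described above concrete, here is a minimal sketch of a proportional scaling rule of the kind used by target-tracking autoscalers. The function name `desired_instances`, the CPU metric, and the default bounds are my own illustrative assumptions, not any provider's API.

```python
import math


def desired_instances(current: int, observed_cpu: float, target_cpu: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Proportional scaling decision (hypothetical helper).

    Sizes the fleet so that average CPU moves toward target_cpu,
    then clamps the result to the configured bounds.
    """
    if current < 1 or target_cpu <= 0:
        raise ValueError("current must be >= 1 and target_cpu must be > 0")
    raw = math.ceil(current * observed_cpu / target_cpu)
    return max(min_instances, min(max_instances, raw))


# Launch-day spike: 4 instances running at 90% CPU against a 60% target.
print(desired_instances(4, 90.0, 60.0))  # 6
# Demand drops afterwards: 6 instances idling at 15% CPU.
print(desired_instances(6, 15.0, 60.0))  # 2
```

The clamp matters as much as the formula: the upper bound caps spend during a runaway spike, and the lower bound keeps a baseline online when traffic is quiet.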

Automation and Orchestration

Automation tools play a central role in cloud deployment. With Infrastructure as Code (IaC), I can set up and manage servers and networks programmatically. This helps me avoid manual errors and deploy the same configuration repeatably. Orchestration platforms like Kubernetes and AWS CloudFormation simplify the process further by managing the lifecycle of applications across multiple environments, reducing my operational workload and improving reliability.
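The core idea behind IaC tools is declarative: I describe the state I want, and the tool works out what to change. The sketch below illustrates that diffing step in plain Python. It is a simplified model, not how Terraform or CloudFormation are actually implemented, and the `plan` function and resource dictionaries are hypothetical.

```python
from typing import Dict, List, Tuple

Resource = Dict[str, object]


def plan(desired: Dict[str, Resource],
         actual: Dict[str, Resource]) -> List[Tuple[str, str]]:
    """Return the (action, resource_name) steps needed to reach `desired`."""
    steps: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            steps.append(("create", name))
        elif desired[name] != actual[name]:
            steps.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            steps.append(("delete", name))
    return steps


# Hypothetical environment: what the config file declares vs. what is running.
desired = {"web": {"size": "small", "count": 3}, "db": {"size": "large", "count": 1}}
actual = {"web": {"size": "small", "count": 2}, "cache": {"size": "small", "count": 1}}

print(plan(desired, actual))
# [('create', 'db'), ('update', 'web'), ('delete', 'cache')]
```

Running `plan(desired, desired)` returns an empty list, which is the property that makes repeated deployments safe: applying the same configuration twice changes nothing.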

Security and Compliance

Security is built into the core of reputable cloud services. I benefit from features such as identity and access management (IAM), encryption both at rest and in transit, firewalls, and continuous monitoring. Many providers also offer compliance certifications for standards, including GDPR, HIPAA, and SOC 2. These help me meet strict industry regulations and protect sensitive data without having to build everything from scratch.

Cost Efficiency

A key reason I favor cloud deployment is the pay-as-you-go pricing model. Instead of large upfront capital expenditure on physical servers, I pay only for the resources I use. Automatic scaling means I avoid over-provisioning, and cost analysis tools from cloud providers enable me to track spending in real-time. This makes it easier for me to manage budgets and optimize resource usage, especially in growing organizations where requirements often change rapidly.

Integration with Existing Systems

Modern cloud infrastructure is designed to integrate seamlessly with legacy systems and third-party tools. Using APIs, connectors, and hybrid deployment models, I can extend my on-premises applications to the cloud or enable data sharing between different environments. This significantly reduces the friction and downtime associated with traditional migrations, allowing me to adopt the cloud without abandoning familiar systems that are still valuable.

Advantages of Cloud Infrastructure Deployment

Deploying infrastructure in the cloud brings several practical advantages that I notice every day as I manage technology projects. One of the biggest benefits is scalability. I can quickly adjust resources based on real-time demand. For example, when user traffic spikes during a major event, I can scale up my servers to maintain performance without any manual intervention. When the event is over, I scale down and only pay for the resources I used.

Another advantage is reduced capital expense. With cloud infrastructure, I avoid the need to purchase physical servers and networking equipment. I pay as I go and can track my usage through detailed dashboards. This means I can start small, test ideas, and scale successful ones without the risk of spending too much upfront.

Cloud platforms also boost my team’s productivity. Automation tools, such as Infrastructure as Code, enable us to deploy, update, or roll back environments using scripts instead of manual configurations. What used to take days now often takes minutes. This velocity helps me focus more on building features and less on repetitive tasks.

Reliability and high availability come built into most major cloud providers. I can deploy my applications across multiple data centers and regions. If one region experiences downtime, my users are automatically routed to another, minimizing disruption.
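A rough way to see why spreading an application across regions helps is the standard formula for redundant components: if each region is available with probability a and failures are independent, the chance that at least one of n regions is up is 1 - (1 - a)^n. The sketch below applies it. The independence assumption is optimistic, since real outages can be correlated, and the function name is my own.

```python
def combined_availability(per_region: float, regions: int) -> float:
    """Availability when any one of `regions` independent replicas suffices."""
    if not 0.0 <= per_region <= 1.0:
        raise ValueError("per_region must be between 0 and 1")
    if regions < 1:
        raise ValueError("regions must be at least 1")
    return 1.0 - (1.0 - per_region) ** regions


# Illustrative input: each region is assumed to be up 99.9% of the time.
for n in (1, 2, 3):
    print(n, f"{combined_availability(0.999, n):.7f}")
```

Treat the result as an upper bound. Shared dependencies such as DNS, identity services, or a bad deployment pushed everywhere at once can take down several regions together.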

Security and compliance are no longer afterthoughts. Cloud infrastructure comes with managed firewalls, encryption, and compliance certifications. Features like role-based access controls and activity logs keep my data secure and make audits easier to handle.

Finally, integration is straightforward. I can connect cloud services to my current on-premises systems or other cloud-based tools using Application Programming Interfaces (APIs). This flexibility enables me to create hybrid solutions that best suit my business needs without requiring extensive customization.

Advantages Overview

| Advantage | Description | Example |
|---|---|---|
| Scalability | Instantly add or remove resources as needed | Scale servers during a product launch and reduce afterward |
| Cost Efficiency | Pay only for what I use and avoid upfront hardware investments | Monthly cloud bills instead of one-time server purchases |
| Automation & Speed | Rapid deployment and updates using scripts and orchestration tools | Deploy new environments with Terraform in minutes |
| Reliability & Availability | Built-in redundancy and global regions ensure my applications stay online | Load balancing apps across three AWS regions |
| Security & Compliance | Managed security features and compliance-ready environments | Enable encryption and audit logging with a few clicks |
| Easy Integration | Seamlessly link cloud resources with existing software using standard APIs | Connect CRM to analytics tools through the Google Cloud Platform |

Disadvantages of Cloud Infrastructure Deployment

While cloud infrastructure deployment offers numerous benefits, I have identified several disadvantages that are crucial to consider before committing to a migration or new project.

Potential for Higher Long-term Costs

Cloud providers advertise cost efficiency, but in my experience, the pay-as-you-go pricing model can lead to unexpectedly high bills. Usage spikes, overprovisioning, or leaving resources running can cause expenses to scale quickly. For organizations with steady, predictable workloads, traditional on-premises hardware can be more economical over time.
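The trade-off above comes down to simple arithmetic: cloud spend accumulates monthly, while on-premises spend is an upfront purchase plus a smaller monthly running cost. Here is a minimal break-even sketch. All figures are made-up illustrations, and the model ignores hardware refresh cycles, staffing, and discounts.

```python
import math
from typing import Optional


def break_even_month(cloud_monthly: float, onprem_upfront: float,
                     onprem_monthly: float) -> Optional[int]:
    """First whole month in which cumulative cloud spend is at least the
    cumulative on-premises spend, or None if that never happens."""
    monthly_gap = cloud_monthly - onprem_monthly
    if monthly_gap <= 0:
        return None  # cloud is never more expensive under these inputs
    return math.ceil(onprem_upfront / monthly_gap)


# Hypothetical steady workload: $2,500/month in the cloud versus
# $36,000 of hardware plus $1,000/month to run it on-premises.
print(break_even_month(2500, 36000, 1000))  # 24
```

If I expect the workload to outlive the break-even point with little change, on-premises deserves a serious look. If demand is uncertain, the flexibility of the cloud is usually worth the premium.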

Vendor Lock-in and Limited Flexibility

Relying on a single cloud vendor often means using proprietary tools and application programming interfaces (APIs). I have seen firsthand how this can make switching providers a complex and expensive process. Migrating workloads between clouds or back to on-premises environments can require significant code rewrites and re-architecture, which adds time and cost.

Security and Compliance Concerns

Although cloud vendors offer strong security features, I cannot ignore the risk of misconfiguration or data exposure. The shared responsibility model requires me to handle various aspects of data protection myself, including proper identity management and access controls. Compliance with strict industry regulations can be more complex when sensitive data is stored on third-party infrastructure, especially if data residency requirements are in place.
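Misconfiguration is the part of the shared responsibility model that falls on me, so I like to check for it automatically. The sketch below flags firewall-style ingress rules that expose sensitive ports to the entire internet. The `IngressRule` type and the port list are hypothetical and not tied to any provider's data model; only Python's standard `ipaddress` module is used.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class IngressRule:
    port: int
    cidr: str  # allowed source range, e.g. "10.0.0.0/16"


# Illustrative choice of ports: SSH, RDP, PostgreSQL.
SENSITIVE_PORTS = {22, 3389, 5432}


def open_to_world(rule: IngressRule) -> bool:
    """True if the source range covers every address (prefix length 0)."""
    return ipaddress.ip_network(rule.cidr, strict=False).prefixlen == 0


def risky_rules(rules: List[IngressRule]) -> List[IngressRule]:
    """Rules that expose a sensitive port to any source address."""
    return [r for r in rules if r.port in SENSITIVE_PORTS and open_to_world(r)]


rules = [
    IngressRule(443, "0.0.0.0/0"),    # public HTTPS: expected
    IngressRule(22, "0.0.0.0/0"),     # SSH from anywhere: flagged
    IngressRule(5432, "10.0.0.0/16"),  # database from private range: fine
    IngressRule(3389, "::/0"),        # RDP from any IPv6 address: flagged
]
for rule in risky_rules(rules):
    print("review:", rule)
```

A check like this would normally run against rules exported from the provider, ideally before changes are applied rather than after.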

Downtime and Outage Risks

No cloud provider offers 100% uptime. I have experienced service outages and regional failures that disrupted operations. High-availability setups can help, but they may require advanced architecture and incur increased costs. Businesses with a very low tolerance for downtime need to factor this risk into their disaster recovery planning.

Limited Customization and Control

Cloud environments often restrict some hardware and software options. While managed services speed up deployment, I sometimes need legacy system support or custom configurations that are not available in public cloud platforms. Control over updates and specific security tools may also be limited compared to on-premises systems.

Performance Variability

Shared cloud resources can lead to unpredictable latency or fluctuating performance, particularly during peak usage periods. When running workloads that require consistent high performance, such as large databases or real-time analytics, I have encountered challenges that are less common with dedicated in-house hardware.

Data Transfer and Bandwidth Costs

Moving large amounts of data in and out of the cloud can become expensive. I have encountered unexpected egress fees that have impacted ongoing project budgets. For data-intensive applications where frequent transfers are unavoidable, these charges add up quickly and are often overlooked during planning.
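Egress charges are easier to plan for once they are written down as a calculation. The sketch below estimates a monthly bill under tiered per-GB pricing. The tier sizes and rates are placeholders I invented for illustration and are not any provider's price list.

```python
from typing import List, Tuple

# (tier size in GB, price per GB). Hypothetical numbers only.
TIERS: List[Tuple[float, float]] = [
    (100.0, 0.00),          # assumed free allowance
    (10_000.0, 0.09),       # next 10 TB
    (float("inf"), 0.07),   # everything beyond that
]


def egress_cost(gb: float, tiers: List[Tuple[float, float]] = TIERS) -> float:
    """Walk the tiers in order, charging each slice at its own rate."""
    cost, remaining = 0.0, gb
    for size, price in tiers:
        used = min(remaining, size)
        cost += used * price
        remaining -= used
        if remaining <= 0:
            break
    return round(cost, 2)


for volume in (50, 5_100, 20_100):
    print(f"{volume} GB -> ${egress_cost(volume)}")
```

Plugging in real rates from the provider's pricing page during planning is a quick way to catch the transfer costs that otherwise show up only on the first invoice.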

Table: Common Disadvantages at a Glance

| Disadvantage | Example or Impact |
|---|---|
| Higher Long-term Costs | Unexpected charges from resource overuse or constant scaling |
| Vendor Lock-in | Difficulty migrating or integrating across multiple providers |
| Security and Compliance Risks | Increased risk of breaches if misconfigured, challenges with data residency requirements |
| Downtime Risks | Outages lead to service disruptions, even with top-tier providers |
| Limited Customization | Inability to support certain legacy apps or unique hardware needs |
| Performance Variability | Latency spikes, inconsistent speeds under heavy global workload |
| Data Transfer and Bandwidth | High costs for moving large datasets across cloud and on-premises locations |

Performance and Reliability

When I deploy infrastructure in the cloud, I notice significant improvements in both performance and reliability compared to traditional on-premises environments. The leading cloud providers—AWS, Azure, and Google Cloud—invest heavily in global networks with high-speed connectivity, low-latency data centers, and advanced hardware. This investment pays off when applications require consistent and fast response times. With features like auto-scaling, my platforms can handle sudden spikes in traffic by automatically allocating more resources on demand. This means my services remain stable even during traffic surges or seasonal events.

I benefit from multi-region and multi-availability zone setups that are built into most cloud platforms. These architectures minimize downtime by replicating critical workloads across different physical locations, so if an outage occurs in one region, my applications can fail over to another, often without users noticing. Cloud providers publish uptime commitments in their Service Level Agreements (SLAs), and many promise 99.9% or higher for individual services.

Here is how some major providers compare in uptime commitments. Exact figures vary by service and deployment configuration, so treat these as indicative:

| Cloud Provider | Published SLA Uptime (%) |
|---|---|
| AWS | 99.99 |
| Microsoft Azure | 99.95–99.99 |
| Google Cloud | 99.95–99.99 |
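SLA percentages like those above are easier to judge once converted into permitted downtime. The short sketch below does that conversion for a 30-day month. The function name is my own, and the result says nothing about how a given provider actually measures or credits downtime.

```python
def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted by an uptime percentage over `days`."""
    total_minutes = days * 24 * 60
    return round(total_minutes * (1 - sla_percent / 100), 2)


for sla in (99.9, 99.95, 99.99):
    print(f"{sla}% uptime allows about "
          f"{allowed_downtime_minutes(sla):.1f} minutes per 30 days")
```

The gap between 99.9% and 99.99% looks small on paper, but it is the difference between roughly 43 minutes and roughly 4 minutes of allowed downtime each month.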

I have found that cloud resources are monitored 24/7, with automated health checks and rapid failover mechanisms. For resource-intensive workloads, I can choose specific instance types optimized for CPU, memory, or storage performance. But I have also encountered situations where shared resources can introduce “noisy neighbor” problems—when another tenant’s heavy usage temporarily affects my performance. Cloud vendors attempt to address this by providing isolated environments, such as dedicated or reserved instances; however, opting for these usually increases costs.

One other key advantage is the ability to deploy quickly and roll out changes or updates without lengthy downtime. With rolling updates and blue-green deployments, I can minimize disruptions and maintain high service availability. However, performance can still fluctuate during heavy regional demand or in less mature markets where data center support is more limited. In these cases, proper load balancing and Content Delivery Network (CDN) integration are essential for maintaining speed and reliability for my users worldwide.
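The blue-green pattern mentioned above is simple enough to model in a few lines. In this sketch, two environments exist side by side, a new version goes to the idle one, and traffic switches only after a health check passes. The `BlueGreen` class and its methods are a hypothetical illustration, not a real deployment tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreen:
    versions: Dict[str, str] = field(
        default_factory=lambda: {"blue": "v1", "green": "v1"})
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def release(self, version: str, health_check: Callable[[str], bool]) -> bool:
        target = self.idle
        previous = self.versions[target]
        self.versions[target] = version       # deploy to the idle side only
        if not health_check(version):
            self.versions[target] = previous  # discard the failed build
            return False                      # live traffic was never touched
        self.live = target                    # switch traffic in one step
        return True

    def rollback(self) -> None:
        self.live = self.idle                 # old environment is still intact


env = BlueGreen()
env.release("v2", health_check=lambda v: True)
print(env.live, env.versions)  # green {'blue': 'v1', 'green': 'v2'}
env.rollback()
print(env.live, env.versions[env.live])  # blue v1
```

The cost of this safety is running two environments at once, which is one reason rolling updates are often preferred for larger fleets.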

For most of my workloads, cloud infrastructure deployment delivers robust performance and reliability out of the box. I appreciate the extensive redundancy, monitoring, and disaster recovery features, but I am always mindful of potential performance limitations during peak loads or in edge cases. Choosing the right configuration and monitoring usage trends are essential steps to optimize cloud infrastructure performance.

User Experience and Ease of Management

When I manage cloud infrastructure deployments, I notice a clear emphasis on user-friendly interfaces and streamlined management tools. Most major cloud providers offer intuitive web-based dashboards that let me monitor resource usage, set up virtual machines, and manage storage with just a few clicks. These portals come with clean layouts, real-time alerts, and guided workflows that help reduce the learning curve for new users.

Role-based access control (RBAC) is another usability strength I rely on. RBAC enables me to assign granular permissions based on team roles, thereby enhancing both security and collaboration. For added convenience, features like single sign-on and two-factor authentication are often available to protect sensitive data without complicating daily operations.
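At its core, RBAC is a two-step lookup: users map to roles, and roles map to permissions. The sketch below shows that lookup with a default-deny rule. The role names, permission strings, and users are all invented for illustration and do not follow any provider's naming scheme.

```python
from typing import Dict, Set

# Hypothetical roles and permission strings.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"vm:read", "storage:read"},
    "operator": {"vm:read", "vm:restart", "storage:read"},
    "admin": {"vm:read", "vm:restart", "vm:delete",
              "storage:read", "storage:write"},
}

# Hypothetical team members and their assigned roles.
USER_ROLES: Dict[str, Set[str]] = {
    "dana": {"viewer"},
    "lee": {"operator"},
}


def is_allowed(user: str, permission: str) -> bool:
    """Default deny: allowed only if one of the user's roles grants it."""
    return any(permission in ROLE_PERMISSIONS.get(role, set())
               for role in USER_ROLES.get(user, set()))


print(is_allowed("lee", "vm:restart"))    # True
print(is_allowed("dana", "vm:restart"))   # False
print(is_allowed("unknown", "vm:read"))   # False
```

Because permissions attach to roles rather than people, onboarding someone new means assigning a role instead of copying a list of individual grants.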

Automation dramatically enhances the ease of management. Tools such as Infrastructure as Code (IaC) let me define and manage infrastructure using simple configuration files. For example, I can use AWS CloudFormation or Terraform to deploy, update, or roll back entire environments with predictable outcomes. This reduces manual intervention and the risk of errors, especially in large-scale or multi-region deployments.

Integration with monitoring tools is usually seamless. Cloud dashboards often include built-in metrics for CPU utilization, memory consumption, and network health. I can set automated policies for scaling resources, receiving alerts when thresholds are met, or triggering self-healing actions during incidents. This level of observability accelerates troubleshooting and ensures optimal uptime.
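Threshold alerts usually require a metric to stay above a limit for several consecutive readings so that a single blip does not page anyone. Here is a minimal sketch of that rule. The function and parameter names are my own and do not mirror a specific monitoring service.

```python
from typing import Sequence


def alarm_triggered(samples: Sequence[float], threshold: float,
                    periods: int) -> bool:
    """True if the most recent `periods` samples all exceed `threshold`."""
    if periods < 1:
        raise ValueError("periods must be at least 1")
    recent = samples[-periods:]
    return len(recent) == periods and all(s > threshold for s in recent)


# CPU utilization readings (percent), oldest first.
print(alarm_triggered([40, 85, 91, 88], threshold=80, periods=3))  # True
print(alarm_triggered([40, 85, 60, 88], threshold=80, periods=3))  # False
print(alarm_triggered([95], threshold=80, periods=3))              # False
```

Tuning `periods` is a trade-off: a higher value means fewer false alarms but slower detection of a real incident.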

Managing cloud deployments from a mobile device is now possible. Provider mobile apps enable me to check the real-time status or take quick actions while on the go. This flexibility is invaluable for teams that require round-the-clock oversight without being tied to a desk.

Compared to traditional on-premises environments or legacy systems, the difference in day-to-day management is significant. While on-premises solutions demand extensive manual oversight and maintenance, cloud platforms emphasize automation, clear reporting, and simplified interfaces. However, feature abundance can sometimes result in complex menus or steep learning curves for new users, and switching between different cloud providers requires adaptation due to the unique toolsets and terminology associated with each.

Overall, my experiences with cloud infrastructure deployment reflect a strong focus on usability, streamlined workflows, and advanced automation, which collectively reduce operational friction and enable organizations to shift their focus from routine management to strategic improvement.

Deployment Process Overview

Deploying cloud infrastructure follows a structured process that ensures smooth setup and ongoing management of resources. Each stage plays a crucial role in building a resilient digital environment tailored to business needs.

Planning and Preparation

I always start with a thorough assessment of my requirements. This involves identifying the desired workload types, estimating resource needs, and outlining security or compliance obligations. I map dependencies between applications and consider existing IT investments that should integrate with the cloud. Effective planning helps me avoid costly mistakes in the future and ensures the architecture aligns with both technical and business objectives.

Selecting a Cloud Provider

Choosing the right cloud provider depends on several factors. I compare service portfolios, global data center locations, uptime guarantees, and the availability of managed services, such as databases or AI tools. Cost structures also play a significant role. For instance, AWS offers a vast range of services with flexible pricing, whereas Google Cloud focuses on its strengths in data analytics and machine learning. Ease of migration, support options, and compliance certifications also significantly influence my decision-making process.

Configuration and Provisioning

Once I settle on a provider, I configure and provision resources through the provider’s portal, command-line tools, or Infrastructure as Code (IaC) tools, such as Terraform or AWS CloudFormation. Here I set up virtual machines, storage, and networking components. I also implement security controls, utilizing features such as identity and access management, as well as encryption. Automation is key—I often use scripts or templates to speed up deployments and ensure consistency across environments.

Monitoring and Maintenance

After deployment, continuous monitoring keeps everything running smoothly. I rely on integrated dashboards and alerts to track performance, resource utilization, and potential security threats. Cloud providers offer tools like AWS CloudWatch or Azure Monitor, which allow me to automate health checks and receive actionable insights in real-time. Maintenance tasks—such as updating instances, patching software, or adjusting resources during traffic spikes—are easier with automated scaling features and robust notification systems. This approach minimizes downtime and keeps operational overhead low.

Comparison with Traditional Infrastructure

When I compare cloud infrastructure deployment to traditional on-premises infrastructure, several distinct differences become apparent. One of the most noticeable is the way resources are provisioned and managed. With cloud platforms like AWS or Azure, I can spin up servers, storage, or networking resources in minutes using a web-based console or automation scripts. Traditional infrastructure relies on physically setting up hardware in a data center, which can take days or even weeks. This time difference greatly impacts project timelines and flexibility.

Another key comparison is scalability. In the cloud, scaling up or down is as simple as adjusting parameters or using automation tools—there’s no need for bulk hardware purchases or lengthy installation periods. Traditional infrastructure often requires over-provisioning to handle peak loads, which results in unused capacity and higher capital expenses. With a cloud model, I only pay for what I use, which helps me control costs and avoid waste.

The maintenance burden is also very different. Traditional setups require manual updates, hardware repairs, and constant monitoring. In the cloud, most maintenance tasks are automated and managed by the provider. Security patches, backup schedules, and disaster recovery are handled behind the scenes, letting my team concentrate on innovation instead of routine upkeep.

Cost structure marks another major contrast. With traditional infrastructure, I face significant upfront investments in servers, networking equipment, and storage. These costs are sunk, regardless of whether my resource needs change. Cloud deployment utilizes a pay-as-you-go model, ensuring expenses align closely with actual usage. However, I do watch out for unexpected charges from resource overuse or data transfers—a challenge unique to cloud billing.

Security and compliance obligations remain important in both models. Traditional infrastructure provides me with more direct control over physical devices, which some organizations prefer for handling sensitive data. The cloud offers strong built-in security and compliance features, but I have to trust my provider’s safeguards and ensure proper configuration on my end.

Performance consistency can also differ. With dedicated physical servers, I generally avoid resource contention issues. In a cloud environment, the “noisy neighbor” effect, where multiple users share underlying hardware, can sometimes lead to unpredictable performance. Leading cloud providers have largely mitigated this through advanced resource management, but occasional fluctuations are still possible.

Finally, disaster recovery options are much stronger in the cloud. Setting up backup data centers or off-site storage is costly and complex with traditional infrastructure. Cloud services enable me to replicate data across regions, ensuring high availability and resilience at a fraction of the time and cost.

Below is a quick comparison table highlighting the fundamental differences:

| Aspect | Cloud Infrastructure | Traditional Infrastructure |
|---|---|---|
| Provisioning Speed | Minutes via automation | Days or weeks with manual setup |
| Scalability | On-demand and flexible | Fixed and slow, requires hardware |
| Cost Model | Ongoing, pay-as-you-go | Large upfront CAPEX, ongoing OPEX |
| Maintenance | Mostly automated | Mostly manual |
| Security Control | Provider-managed, some user config | Direct and granular control |
| Performance | High but variable | Consistent but limited by hardware |
| Disaster Recovery | Integrated, multi-region | Requires manual planning and extra sites |

These differences shape the decision-making process for organizations evaluating cloud vs. traditional deployments. Each model has its strengths, but the agility and automation of cloud deployment frequently deliver greater long-term benefits for digital projects.

Alternatives to Leading Cloud Deployment Solutions

When considering cloud infrastructure deployment, most businesses default to well-established providers such as AWS, Microsoft Azure, and Google Cloud Platform. However, various alternative solutions cater to specific needs or offer unique advantages. These alternatives are especially appealing for organizations seeking greater customization, cost savings, or specialized features.

Open Source and Self-Hosted Platforms

Self-hosted cloud infrastructure is ideal for teams with strong DevOps skills who want maximum data control. Platforms such as OpenStack and CloudStack allow me to build private or hybrid clouds within my own data center or on rented hardware. These platforms are highly customizable, offering complete flexibility in resource allocation and network configurations. They require more hands-on management, but the tradeoff is total control over security and compliance. For organizations with strict regulatory requirements or those seeking to avoid vendor lock-in, open-source solutions make a compelling case.

Niche and Regional Cloud Providers

Not every project benefits from the global scale of hyperscalers. Niche providers such as DigitalOcean, Linode, and Vultr primarily target developers and small businesses. I appreciate their straightforward pricing, beginner-friendly dashboards, and focus on simplicity. DigitalOcean’s “droplets” make it easy to spin up virtual machines quickly, while Linode and Vultr excel at straightforward cloud hosting with transparent costs. For businesses operating under strict regional data regulations, regional providers—such as OVHcloud in Europe or Alibaba Cloud in Asia—offer local data residency and compliance certifications.

Managed Hosting and Bare-Metal Services

Some workloads require dedicated resources for performance or compliance. Providers like Hetzner, OVHcloud, and IBM Cloud offer bare-metal servers with cloud-like management options. These solutions combine the predictability and performance of traditional hardware with the flexibility of cloud billing and automation tools. Managed hosting options include integrated backup, monitoring, and security, relieving me of day-to-day maintenance while still offering granular control when needed.

Edge and Hybrid Solutions

Hybrid cloud platforms, such as VMware Cloud and Nutanix, enable me to manage workloads across on-premises infrastructure and cloud services. This approach is useful when low-latency data processing or compliance requirements make traditional cloud impractical. Edge computing providers, such as Cloudflare Workers or Akamai Edge, enable me to process data closer to users, improving application speed and reliability for globally distributed users.

Feature and Cost Comparison Table

| Solution Type | Strengths | Weaknesses | Typical Use Cases |
|---|---|---|---|
| OpenStack, CloudStack | Customization, security, compliance | Complex setup, high management overhead | Private cloud |
| DigitalOcean, Linode | Simplicity, cost clarity, support | Limited advanced features | Web apps, SMBs |
| OVHcloud, Hetzner | Bare-metal performance, EU compliance | Fewer SaaS integrations | Regulated industries |
| VMware Cloud, Nutanix | Hybrid flexibility, legacy integration | Higher cost, vendor lock-in | Enterprise & hybrid use |
| Cloudflare Workers, Akamai | Edge performance, global reach | Limited resource types | Latency-sensitive apps |

Choosing an alternative to the major cloud providers depends on the specific balance between control, cost, and convenience you need. I evaluate projects based on their performance requirements, compliance factors, and resource scalability to pick the most suitable deployment solution outside the big three. Each of these alternatives has distinct advantages, especially for specialized projects or when seeking to optimize cost and control.

Hands-on Experience with Cloud Infrastructure Deployment

Setting up cloud infrastructure for the first time was both exciting and eye-opening. I began by selecting Amazon Web Services (AWS) as my cloud provider. Their web-based console streamlined resource provisioning—within minutes, I spun up virtual machines, storage buckets, and databases. The ease of scaling resources was a surprise to me. During a simulated traffic spike, I used AWS Auto Scaling groups to add instances, and the platform automatically managed the load. This process took a fraction of the time compared to configuring new hardware in a traditional environment.

The security setup felt comprehensive but required meticulous attention to detail. Using Identity and Access Management (IAM) roles, I created granular permissions for each service and user. Multi-factor authentication added another layer of protection. Alongside automated backups, monitoring tools such as CloudWatch made it simple to set alerts for performance anomalies and react to resource limits in real time.

For automation, I leveraged Infrastructure as Code (IaC) using AWS CloudFormation templates. Defining the entire infrastructure stack in code made deployments repeatable and far less error-prone. I could quickly roll back to a previous configuration if something broke, saving the hours I used to spend on manual troubleshooting.

When I tried Microsoft Azure for another project, I found its portal similarly intuitive. Azure Resource Manager and its template system provided an alternate approach to automation. Integration with development tools, along with built-in cost management dashboards, helped me stay on budget and optimize use.

One challenge I noticed was performance variability during peak times, especially on shared resources. While most of my workloads ran as expected, I occasionally experienced slower response times when running large-scale batch processes. I learned to mitigate this by using dedicated instances and regional placement to isolate critical workloads.

I appreciated how cloud platforms handled maintenance. Routine patching and updates were managed by the provider, unlike my previous experience with on-premises servers, which required constant manual updates. However, keeping up with frequent service updates and new features in the cloud required me to invest time in ongoing learning and development.

For compliance-heavy environments, I experimented with hybrid deployments using both public cloud resources and on-premises hardware. Setting up secure VPNs and network peering allowed me to keep sensitive data local while harnessing cloud scalability for less sensitive workloads. The transition was smooth thanks to the built-in migration tools provided by both AWS and Azure.

In all deployments, cost tracking became crucial. I utilized the built-in billing dashboards and set up budget alerts to prevent unexpected charges. Despite pay-as-you-go pricing, unused resources or misconfigured services could quickly drive up monthly bills if left unchecked.
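The logic behind a budget alert is a straight-line forecast: take spend so far, project it to the end of the month, and compare it with the budget. The sketch below shows one way to express that. The function names, the 80% warning ratio, and the dollar amounts are assumptions for illustration and do not describe how any provider's billing tool works.

```python
def projected_month_end(spend_to_date: float, day_of_month: int,
                        days_in_month: int = 30) -> float:
    """Linear projection of month-end spend from the daily average so far."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must be within the month")
    return round(spend_to_date / day_of_month * days_in_month, 2)


def budget_status(spend_to_date: float, day_of_month: int, budget: float,
                  days_in_month: int = 30, warn_ratio: float = 0.8) -> str:
    """Classify the forecast as 'ok', 'warning', or 'over'."""
    forecast = projected_month_end(spend_to_date, day_of_month, days_in_month)
    if forecast > budget:
        return "over"
    if forecast >= warn_ratio * budget:
        return "warning"
    return "ok"


# Hypothetical $1,000 monthly budget, checked on day 10.
print(budget_status(400, 10, 1000))  # over    (forecast 1200)
print(budget_status(280, 10, 1000))  # warning (forecast 840)
print(budget_status(200, 10, 1000))  # ok      (forecast 600)
```

A linear forecast is crude, since spend is rarely even across a month, but it is usually enough to catch a forgotten resource before it runs for weeks.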

These hands-on experiences cemented my appreciation for the flexibility and speed of cloud infrastructure deployment. While the learning curve was real—especially with automated tools and security best practices—the payoff in agility, reliability, and reduced operational overhead was immediate and tangible.

Final Verdict

Cloud infrastructure deployment has completely changed the way I approach building and scaling digital projects. The flexibility and speed it offers let me focus on innovation rather than worrying about hardware or manual maintenance. While there are challenges to consider—like managing costs and ensuring security—the benefits far outweigh the drawbacks for most organizations.

With the right planning and tools, cloud deployment unlocks opportunities for growth and efficiency that aren’t possible with traditional setups. For anyone looking to modernize their IT strategy, embracing the cloud is a move I wouldn’t hesitate to recommend.

Frequently Asked Questions

What is cloud infrastructure deployment?

Cloud infrastructure deployment refers to setting up and configuring servers, storage, networking, and software using cloud platforms like AWS, Azure, or Google Cloud. This enables organizations to access computing resources on demand, without buying and maintaining their own physical hardware or paying large upfront costs.

What are the main benefits of cloud infrastructure deployment?

Key benefits include scalability, flexibility, cost savings through pay-as-you-go pricing, faster deployment times, reliable uptime, robust security features, and easier integration with existing systems. Cloud deployment also reduces the need for manual maintenance, allowing teams to focus on innovation.

What types of cloud deployment models are available?

There are three main types: public cloud, private cloud, and hybrid cloud. Public clouds are run by third-party providers and shared among multiple customers, private clouds dedicate infrastructure to a single organization, and hybrid clouds combine both to strike a balance between flexibility and control.

How is cloud infrastructure different from traditional on-premises infrastructure?

Cloud infrastructure is managed by external providers and accessed over the internet, offering on-demand scalability and automated maintenance. Traditional infrastructure requires buying and managing physical hardware locally, resulting in higher upfront costs and manual upkeep.

How does virtualization support cloud infrastructure?

Virtualization allows multiple virtual machines to run on a single physical server, maximizing resource efficiency and minimizing hardware requirements. This technology is fundamental to cloud computing, enabling flexible resource allocation and easier scaling.

What are the key steps in deploying cloud infrastructure?

Key steps include assessing requirements, selecting a cloud provider, configuring resources, establishing security, automating deployment (often using Infrastructure as Code), and implementing continuous monitoring for performance and security.

What security features are available in cloud infrastructure?

Cloud providers offer built-in security such as encryption, identity and access management, firewalls, compliance certifications, and automated monitoring. Role-based access control (RBAC) and multi-factor authentication further enhance protection.
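
To make role-based access control concrete, here is a minimal sketch of the underlying check: a user may perform an action only if one of their roles grants it. The role names, permissions, and the `is_allowed` helper are hypothetical; each cloud platform defines its own roles and policy format.

```python
# Hypothetical roles and permissions; real platforms define their own.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "operator": {"read", "restart"},
    "admin": {"read", "restart", "delete"},
}


def is_allowed(roles, action):
    """True if any of the user's roles grants the action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["viewer"], "delete"))     # False
print(is_allowed(["operator"], "restart"))  # True
```

Unknown roles grant nothing, so access is denied by default.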

Can cloud infrastructure help with compliance requirements?

Yes. Major cloud providers offer tools and certifications to help organizations meet industry regulations, such as GDPR, HIPAA, and PCI DSS, making it easier for them to remain compliant and secure sensitive data.

Are there any disadvantages to deploying cloud infrastructure?

Potential drawbacks include higher long-term costs due to unmonitored usage, potential vendor lock-in, security and compliance challenges, limited customization options, performance variability during peak times, and high data transfer fees.

How reliable is cloud infrastructure compared to traditional setups?

Cloud infrastructure is typically more reliable, with providers offering high uptime Service Level Agreements (SLAs), automatic failover, multi-region availability, and robust monitoring. However, occasional outages can still occur and should be considered when planning.
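
An uptime percentage is easier to judge once it is converted into permitted downtime. The short sketch below does that arithmetic, assuming a 30-day month; the function name is illustrative, and actual SLAs define their own measurement periods and exclusions.

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Minutes of downtime a given uptime percentage permits over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


for sla in (99.0, 99.9, 99.99):
    print(f"{sla}% uptime -> {allowed_downtime_minutes(sla):.1f} min/month")
```

Under this assumption, 99.9% uptime still leaves room for roughly 43 minutes of downtime a month, which is why outages remain worth planning for.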

What role does automation play in cloud infrastructure management?

Automation tools, such as Infrastructure as Code (IaC) and orchestration platforms, streamline deployment, configuration, and scaling, thereby reducing manual workload and operational errors while improving consistency and speed.
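
Declarative IaC tools generally work by comparing the state you describe with what actually exists, then applying only the difference. The sketch below illustrates that comparison step in plain Python. The `plan` function and the resource names are hypothetical, and this is a simplification, not how any particular tool is implemented.

```python
def plan(desired, actual):
    """Compare desired and actual resources; return what to create, update, delete."""
    create = {name: cfg for name, cfg in desired.items() if name not in actual}
    update = {name: cfg for name, cfg in desired.items()
              if name in actual and actual[name] != cfg}
    delete = [name for name in actual if name not in desired]
    return create, update, delete


# Hypothetical resource names and settings.
desired = {"web": {"size": "small", "count": 3}, "db": {"size": "large", "count": 1}}
actual = {"web": {"size": "small", "count": 2}, "cache": {"size": "small", "count": 1}}

create, update, delete = plan(desired, actual)
print("create:", create)
print("update:", update)
print("delete:", delete)
```

Because the plan is derived from the difference, running it again after the changes are applied yields nothing to do, which is what makes this style of automation repeatable.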

Is it easy to integrate cloud infrastructure with existing systems?

Usually. Most cloud platforms offer APIs and integration tools for connecting cloud services with on-premises systems, which supports hybrid environments and gradual transitions. Legacy applications may still need some adaptation.

How does the cost model of cloud infrastructure work?

Cloud infrastructure uses a pay-as-you-go model, where you pay only for the resources you provision and use. This reduces upfront investments but requires careful monitoring to avoid unexpected charges from idle, over-provisioned, or misconfigured resources.
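
A quick calculation shows why running time matters under hourly billing. This is a rough sketch only: the $0.10 hourly rate is invented for illustration and is not a real provider price, and actual bills include storage, data transfer, and other line items.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


def monthly_cost(hourly_rate, hours_running=HOURS_PER_MONTH, count=1):
    """Cost of `count` identical resources billed per hour."""
    return hourly_rate * hours_running * count


# Hypothetical rate of $0.10/hour, not a real provider price.
always_on = monthly_cost(0.10)
business_hours = monthly_cost(0.10, hours_running=8 * 22)
print(f"always on: ${always_on:.2f}, business hours only: ${business_hours:.2f}")
```

With these assumed numbers, a resource left running around the clock costs about four times as much as one that runs only during working hours.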

What are some alternatives to major cloud providers like AWS and Azure?

Alternatives include open-source platforms (e.g., OpenStack), managed hosting, bare-metal servers, niche providers (e.g., DigitalOcean), and hybrid or edge solutions, each offering unique features that cater to specific business needs.

What are the main challenges when migrating to cloud infrastructure?

Challenges include managing costs, ensuring data security and compliance, adapting legacy applications, avoiding vendor lock-in, and handling potential performance variability. Planning and ongoing management are essential for a smooth migration.

How can organizations ensure high performance in the cloud?

To maintain high performance, organizations should use auto-scaling, load balancing, Content Delivery Networks (CDNs), monitoring tools, and properly configure resources based on workload needs. Regular optimization is key for reliable service.
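
As an illustration of how auto-scaling can decide on capacity, the sketch below uses a simple proportional rule: scale the instance count by the ratio of observed load to target load, then clamp it to configured limits. The function name, the 60% CPU target, and the limits are assumptions for this example; production autoscalers add cooldown periods and smoothing on top of a rule like this.

```python
import math


def desired_instances(current, cpu_percent, target_percent=60, min_n=1, max_n=10):
    """Scale instance count in proportion to load, clamped to [min_n, max_n]."""
    wanted = math.ceil(current * cpu_percent / target_percent)
    return max(min_n, min(max_n, wanted))


print(desired_instances(4, 90))   # 6
print(desired_instances(4, 30))   # 2
print(desired_instances(4, 300))  # 10
```

The upper limit doubles as a cost safeguard, since a traffic spike cannot push the instance count past `max_n`.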